A mechanism in a solo, cooperative, or competitive game in which the rules governing a non-player faction’s actions are both known to all players and able to be changed in response to player actions.

In a solo game or cooperative game, there needs to be some way to generate a challenge to engage the players. Games like solitaire create this by having a random deck that the player must work through to solve a puzzle (or not, as dictated heavily by the luck of the draw). That random deck is the AI of the game – it “controls” what the player must face at his or her next decision.

For this article, AI is the process for determining how the gamestate changes in response to the human player’s choices. It is merely a set of rules governing how an abstract non-human opponent is “responding” to the human player.

When an AI is completely random, gameplay suffers – imagine playing Battleship where the AI doesn’t attempt to get additional hits once it gets a single hit on the aircraft carrier. After watching the AI randomly pick an illogical move, the game suddenly ceases to be fun because victory without challenge is meaningless. Similarly, imagine an AI playing Settlers of Catan that builds things based solely on the roll of a die: roll a 1, build a road; roll a 2, build a town. A designer could come up with a ruleset that defines all AI actions with dice rolls, requiring no human decision-making or interpretation, but the resulting opponent would play without any apparent purpose.

An alternative is to have an AI follow a strict set of rules that again require no human decision-making. For example, in Dominion, an AI might follow a strategy of purchasing Provinces (the highest VP card available) when able, and otherwise purchasing the highest value coin. However, when an AI is completely rules-based, gameplay also suffers. An AI whose actions a player can predict exactly removes uncertainty, which is one of the greatest things about playing with actual human beings. Furthermore, playing optimally then requires that the player include the AI’s next turn as part of his deliberations, increasing analysis paralysis and draining the fun. Imagine playing Hangman with a person who starts guessing at “a” and works through the alphabet until he loses or gets to “z” – perhaps fun once for novelty’s sake, but the word-picker can always win if he thinks through his word well enough.

Limited Variability Reprogrammable AI

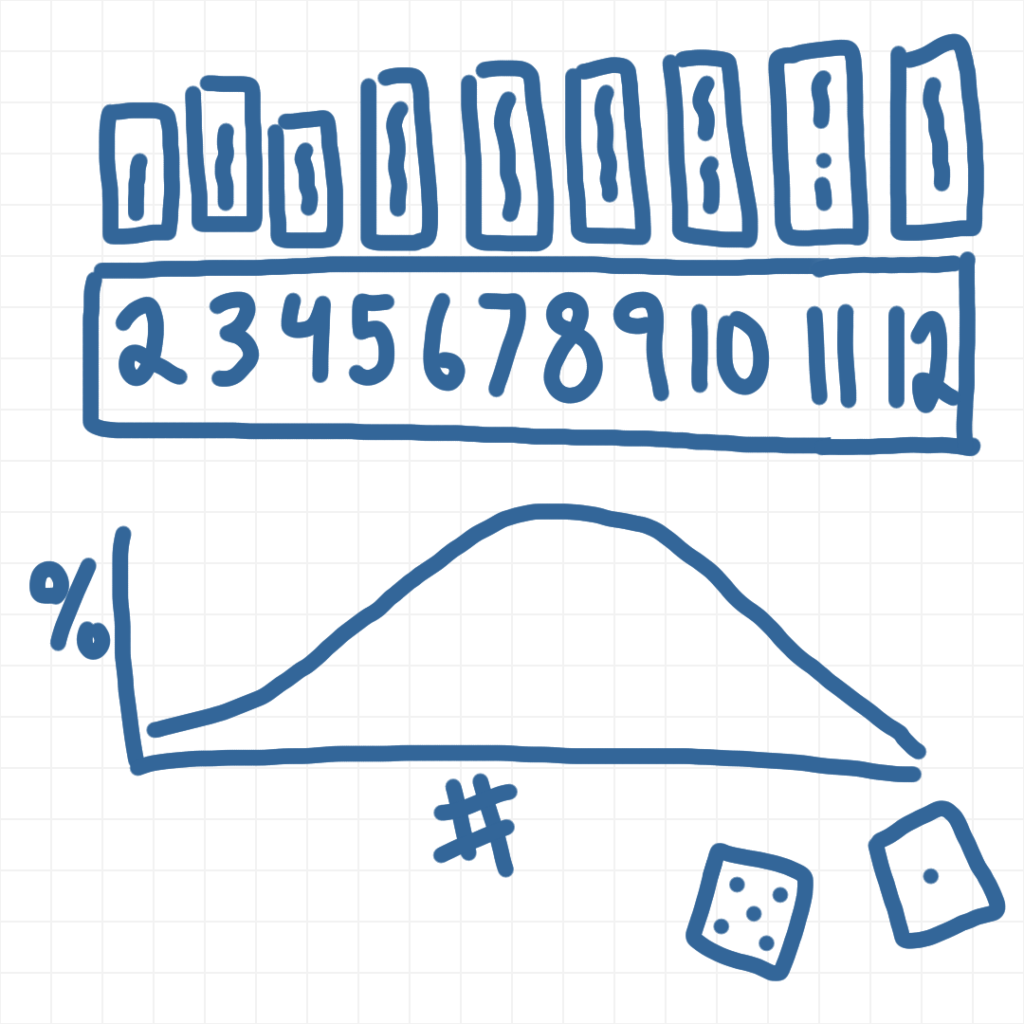

Here is a different way. Imagine an AI whose action is randomly chosen from a defined set of possible actions, with some of those actions more likely to occur than others. That is the Limited Variability part – the AI isn’t completely predictable, but a player can make a pretty good guess about what might occur. The sum of two 6-sided dice is used to inject the variability. For now, what you have is a set of possible actions that an AI can take, with the action at spot 7 most likely to get rolled, and the two actions at spots 2 and 12 least likely to get rolled. This is an improvement over both the purely rules-based and the purely random approaches in that the AI isn’t completely predictable but also isn’t likely to perform the “fringe” actions. However, those “fringe” actions may not always be bad moves, and the central moves may not always be good moves.
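To make the odds concrete, here is a minimal Python sketch of the two-dice idea. The action names in `ACTION_TABLE` and the function names are placeholders invented for illustration; only the 2-through-12 layout and the two 6-sided dice come from the description above.

```python
import random
from collections import Counter
from fractions import Fraction


def two_d6_probabilities():
    """Exact probability of each possible sum of two 6-sided dice."""
    counts = Counter(a + b for a in range(1, 7) for b in range(1, 7))
    return {total: Fraction(ways, 36) for total, ways in sorted(counts.items())}


# Hypothetical action table: one action per spot, 2 through 12.
# The action names are placeholders, not taken from any real game.
ACTION_TABLE = {
    2: "raze a farm",
    3: "steal Resource X",
    4: "fortify the border",
    5: "recruit soldiers",
    6: "raid a trade route",
    7: "attack the weakest player",
    8: "build a wall",
    9: "hoard gold",
    10: "attack guard towers",
    11: "send a spy",
    12: "do nothing",
}


def roll_ai_action(table, rng=random):
    """Roll 2d6 and return the rolled total with the action in that spot."""
    total = rng.randint(1, 6) + rng.randint(1, 6)
    return total, table[total]


if __name__ == "__main__":
    for total, p in two_d6_probabilities().items():
        print(f"spot {total:2d}: {str(p):>4}  {ACTION_TABLE[total]}")
    print(roll_ai_action(ACTION_TABLE))
```

Spot 7 comes up on 6 of the 36 possible rolls (1 in 6), while spots 2 and 12 each come up on only 1 of 36. The three central spots (6, 7, and 8) together account for 16 of 36 rolls, which is why the AI's behavior feels mostly, but not entirely, predictable.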

The Reprogrammable part is where it gets fun. Imagine that the AI’s different possible actions are printed on cards and placed next to a reference card with 11 different spots, numbered 2 through 12. Because these actions are printed on cards, they can be easily swapped, and what was the most likely action (in spot 7) can be swapped with another card to shift the apparent behavior of the AI.

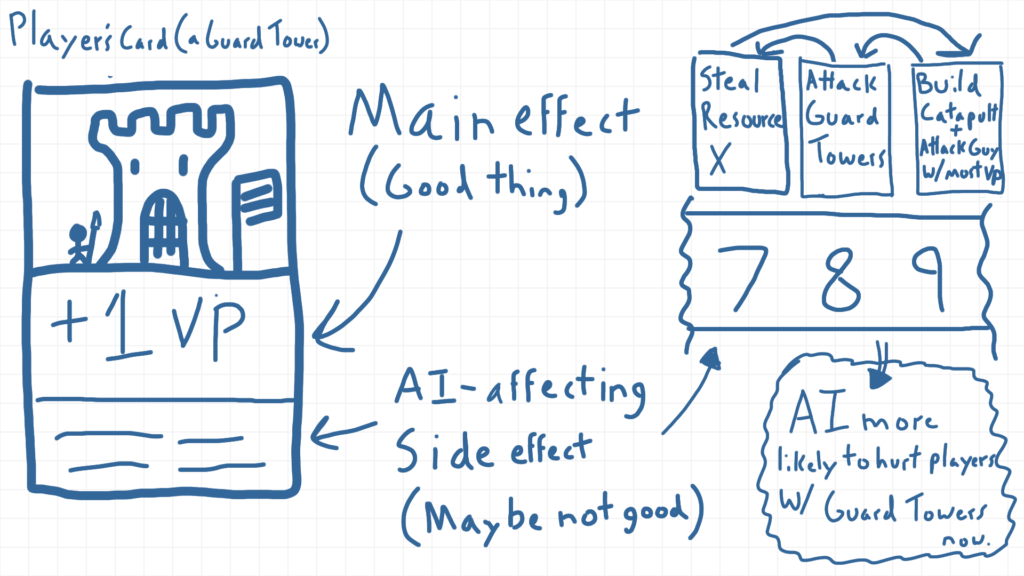
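The card swap can be sketched in a few lines of Python. This is only an illustration under assumed names: `swap_cards`, `spot_probability`, `action_probability`, and the action labels are all hypothetical, and the table is modeled as a plain dictionary from spot number to action card.

```python
from fractions import Fraction


def spot_probability(spot):
    """Chance of rolling `spot` as the sum of two 6-sided dice."""
    return Fraction(6 - abs(spot - 7), 36)


def swap_cards(table, spot_a, spot_b):
    """Return a new table with the cards in two spots exchanged."""
    new_table = dict(table)
    new_table[spot_a], new_table[spot_b] = table[spot_b], table[spot_a]
    return new_table


def action_probability(table, action):
    """Chance that the AI performs `action` on its next roll."""
    spot = next(s for s, a in table.items() if a == action)
    return spot_probability(spot)


# Hypothetical starting layout: placeholder cards in spots 2 through 12.
table = {spot: f"action {spot}" for spot in range(2, 13)}
table[7] = "hoard gold"
table[10] = "attack guard towers"

reprogrammed = swap_cards(table, 7, 10)
```

Before the swap, "attack guard towers" sits in spot 10 and is rolled 3 times in 36; after the swap it sits in spot 7 and is rolled 6 times in 36, while "hoard gold" drops to 3 in 36. The dice never change; only the cards move.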

The fun part: designing the Reprogramming mechanic

- Reprogrammed as a side effect of player actions. Perhaps players have to play cards on their turns to get closer to their win condition. One of the side effects of these cards might be to reprogram a particular action of the AI to be either closer to or further from the highest-probability spot at 7. For example, maybe building a guard tower for extra victory points has the side effect of increasing the AI’s “desire” to attack guard towers by moving that action into spot 7 (thematically, perhaps the AI feels threatened by the player’s increased focus on military). As AI actions shift upwards and downwards, certain actions become more frequent in what feels like an intelligent shift in strategy in response to player choices.

“I’ll gain VP if I build my Guard Tower, but the AI will be more likely to attack me in the future. Also, it will be less likely to steal Resource X, which my opponent needs to complete his Dark Temple”

- Reprogrammed as a main effect of player actions. Players may choose to spend their turn (and resources) shifting certain AI priorities up or down (perhaps the AI is a neighboring country and the reprogramming is accomplished via a diplomatic envoy). This method is probably better suited for a competitive game since the AI might be induced to negatively affect certain players more based on their particular strategy. If your opponent has a farm-heavy strategy, bribing the AI to prioritize attacking the player with the most farms becomes a smart move to make.

“Do I reprogram the AI to be more likely to hurt my opponents, or do I build my infrastructure to get closer to my win condition?”

- Reprogrammed based on rounds elapsed. After a certain number of rounds, actions are shifted based on a previously unseen schedule, perhaps as part of a story-driven cooperative campaign-style game. In this manner, completely new actions could be introduced at certain times, throwing the team of players against an adversary with unprecedented new powers. Perhaps around the halfway point, after the players have started marshaling their forces on the border of the Land of Evil, the AI suddenly changes, gaining the power to teleport certain units into the players’ unprotected capitals. The new teleportation action card gets added at a certain spot on the scale, replacing an older action, and the players now have to account for the possibility that an orcish assassin may suddenly begin wreaking havoc across the undefended countryside.
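The reprogramming triggers above could be sketched as two small Python operations on the action table. Everything here is an assumption made for illustration: the function names, the rule that a card moves by swapping places with its neighbor, and the shape of the round schedule are one possible design, not a prescribed one.

```python
def shift_toward_seven(table, action, steps=1):
    """Move `action` up to `steps` spots closer to 7 by swapping neighbors.

    Assumed rule: each step swaps the card with the adjacent card on the
    side nearer to spot 7, so the displaced cards drift outward.
    """
    table = dict(table)
    pos = next(s for s, a in table.items() if a == action)
    for _ in range(steps):
        if pos == 7:
            break  # already in the most likely spot
        nxt = pos + 1 if pos < 7 else pos - 1
        table[pos], table[nxt] = table[nxt], table[pos]
        pos = nxt
    return table


def apply_schedule(table, schedule, round_number):
    """Replace cards whose scheduled round has arrived.

    `schedule` maps a round number to {spot: new_action} replacements.
    """
    table = dict(table)
    for spot, new_action in schedule.get(round_number, {}).items():
        table[spot] = new_action
    return table


# Hypothetical layout and campaign schedule.
table = {spot: f"action {spot}" for spot in range(2, 13)}
table[10] = "attack guard towers"
schedule = {5: {8: "teleport an assassin"}}

# Side effect of a player building a guard tower:
after_tower = shift_toward_seven(table, "attack guard towers", steps=3)

# Start of round 5 in a campaign game:
after_round_5 = apply_schedule(table, schedule, round_number=5)
```

A side-effect card and a deliberate diplomatic action would both call something like `shift_toward_seven` (or a mirror-image function that pushes a card outward); the difference is only in what the player pays to trigger it. The round-based version swaps in cards the players have never seen, which is why it replaces a spot outright instead of shuffling existing cards.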